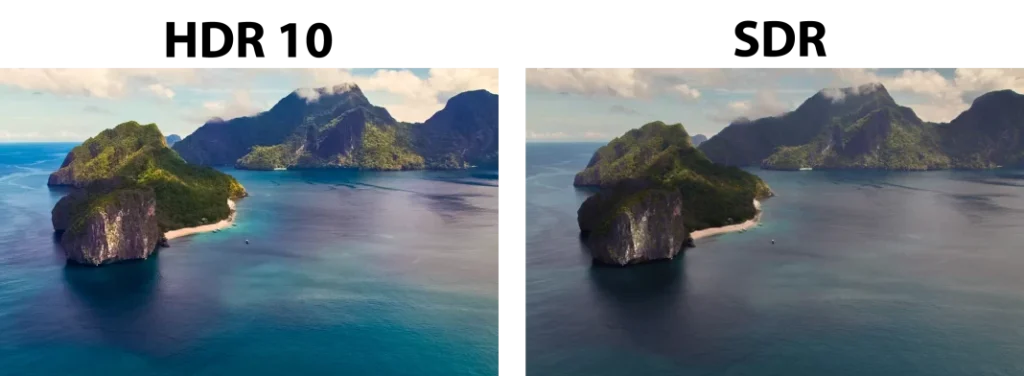

Let me explain in simple words what HDR means for TVs. HDR (High Dynamic Range) appeared on TVs around 2016, just as TVs with 10-bit displays, capable of showing over a billion shades, started to be released.
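The "billion shades" figure comes from simple arithmetic. Each of the three color channels (red, green and blue) has 2^bits brightness levels, and the number of possible colors is that count cubed. Here is a minimal Python sketch. The function names are illustrative, and the bit depths listed are the typical values for each format, not strict rules:

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct values one color channel can take at this bit depth."""
    return 2 ** bits


def total_colors(bits: int) -> int:
    """Number of distinct RGB combinations: levels per channel, cubed."""
    return levels_per_channel(bits) ** 3


# 8-bit is typical for SDR, 10-bit for HDR10/HDR10+, up to 12-bit for Dolby Vision.
for bits in (8, 10, 12):
    print(f"{bits}-bit: {levels_per_channel(bits):,} levels per channel, "
          f"{total_colors(bits):,} colors")
# 8-bit: 256 levels per channel, 16,777,216 colors
# 10-bit: 1,024 levels per channel, 1,073,741,824 colors
# 12-bit: 4,096 levels per channel, 68,719,476,736 colors
```

Going from 8 to 10 bits multiplies the number of colors by 64, because each of the three channels gets four times as many levels.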

This created a problem. Standard video, commonly called SDR (Standard Dynamic Range), is typically encoded with 8-bit color depth and could not carry 10-bit information. As a result, a TV with a high-end screen showed only a small part of the colors it was able to reproduce. HDR was developed to make full use of such screens, both for high-quality video and for video games played on the TV.

To make this possible, metadata is transmitted along with the video stream, and a TV that receives this metadata uses it to process the video correctly.

Generations of HDR

As usually happens when something new comes along, many companies and industry associations began developing their own HDR formats, so several generations of HDR are now used in TVs.

| HDR Generation | Color Depth | Typical Mastering Peak (nits) | Metadata | Features |
|---|---|---|---|---|
| HDR10 | 10-bit | Up to 1000 | Static | Basic standard; one setting applied to the entire video |
| HDR10+ | 10-bit | Up to 4000 | Dynamic | Enhanced version of HDR10; adjusts brightness and contrast for each scene |
| Dolby Vision | Up to 12-bit | Up to 4000+ | Dynamic | Supports 12-bit color depth; requires compatible hardware |
| HLG (Hybrid Log-Gamma) | 10-bit | Depends on display | None | Designed for broadcasting; compatible with both SDR and HDR displays |
| Advanced HDR by Technicolor | 10-bit | Up to 1000 | Dynamic | Offers flexibility in content production and broadcasting |
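The table notes that HLG needs no metadata and works on both SDR and HDR displays. One reason, not covered in this article, is its transfer curve, defined in ITU-R BT.2100. The lower half of the signal follows a square-root curve similar to conventional SDR gamma, and only the highlights are encoded logarithmically. The sketch below implements just that curve (the OETF) for normalized values. Real broadcast chains add scaling and display-side adjustments that are left out here:

```python
import math

# HLG OETF constants from ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A                    # 0.28466892
C = 0.5 - A * math.log(4 * A)    # approximately 0.55991073


def hlg_oetf(e: float) -> float:
    """Map normalized scene light e in [0, 1] to an HLG signal value in [0, 1]."""
    if not 0.0 <= e <= 1.0:
        raise ValueError("scene light must be normalized to [0, 1]")
    if e <= 1 / 12:
        # Lower half of the signal range: a square-root curve, close to SDR-style gamma.
        return math.sqrt(3 * e)
    # Upper half: logarithmic, packing bright highlights into the top of the range.
    return A * math.log(12 * e - B) + C


for e in (0.0, 1 / 12, 0.25, 1.0):
    print(f"scene light {e:.3f} -> signal {hlg_oetf(e):.3f}")
```

An SDR TV that interprets the signal with its usual gamma still shows the lower part of the curve reasonably, which is the basis of HLG's backward compatibility.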

How does HDR work?

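HDR extends the dynamic range of the picture, that is, the span between its darkest and brightest parts, which you see as higher brightness and contrast; it is usually paired with a wider color range as well. The challenge is that the same content should play on both older and newer TVs. You can't simply raise the brightness levels in the transmitted signal, because that would distort the picture on screens that are not designed for it. That is why metadata was introduced: it tells the TV how the content was mastered, so the TV can adjust its processing accordingly.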
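HDR10, HDR10+ and Dolby Vision (though not HLG) describe brightness with the PQ curve from SMPTE ST 2084, which this article does not cover. A PQ signal value stands for an absolute luminance of up to 10,000 nits. This helps explain why brightness can't simply be turned up in the signal: many of those values lie beyond what a given TV can reproduce, and metadata helps the TV decide how to map them. Below is a minimal Python sketch of the PQ decoding formula. The `code_to_signal` helper is a hypothetical simplification that assumes full-range code values:

```python
# PQ (SMPTE ST 2084) constants.
M1 = 2610 / 16384          # 0.1593017578125
M2 = 2523 / 4096 * 128     # 78.84375
C1 = 3424 / 4096           # 0.8359375
C2 = 2413 / 4096 * 32      # 18.8515625
C3 = 2392 / 4096 * 32      # 18.6875
PEAK_NITS = 10000.0        # PQ covers 0 to 10,000 nits (cd/m^2)


def pq_to_nits(signal: float) -> float:
    """Decode a normalized PQ signal value in [0, 1] to absolute luminance in nits."""
    p = signal ** (1 / M2)
    numerator = max(p - C1, 0.0)
    denominator = C2 - C3 * p
    return PEAK_NITS * (numerator / denominator) ** (1 / M1)


def code_to_signal(code: int, bits: int = 10) -> float:
    """Hypothetical full-range mapping of an integer code value to [0, 1].
    Real video usually uses limited range (for example 64-940 for 10-bit)."""
    return code / (2 ** bits - 1)


for code in (0, 256, 512, 768, 1023):
    print(code, round(pq_to_nits(code_to_signal(code)), 1))
```

Half of the signal range maps to only about 92 nits, while the top quarter covers roughly 1,000 to 10,000 nits, far above what most TVs can show.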

The first format, HDR10, uses static metadata: it is sent once, at the beginning of the video, and applies to the whole film. If the TV supports HDR and detects this metadata, it processes the video with 10-bit color depth; if HDR is disabled on the TV or the video contains no metadata, the video is processed as standard video. The weakness of HDR10 is that one setting cannot suit every scene, since bright scenes need to be handled differently from dark ones.

Later formats work at the scene level: HDR10+ and Dolby Vision support dynamic metadata, which can change from scene to scene.
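The practical difference between static and dynamic metadata can be shown with a deliberately simplified model. The sketch assumes a hypothetical TV with a 600-nit peak, two made-up scenes, and a plain linear scale-down in place of the tone curves real TVs use. The `Scene` class and the helper functions are illustrative names, not part of any HDR specification:

```python
from dataclasses import dataclass


@dataclass
class Scene:
    name: str
    max_nits: float  # brightest value in the scene (hypothetical per-scene metadata)


def scale_factor(content_peak: float, display_peak: float) -> float:
    """Hypothetical linear scale-down so that content_peak fits within display_peak."""
    return min(1.0, display_peak / content_peak)


def compare(film: list[Scene], display_peak: float) -> dict[str, tuple[float, float]]:
    """Return (static, dynamic) scale factors for every scene."""
    # Static metadata (HDR10-style): one peak value describes the whole film.
    static_peak = max(scene.max_nits for scene in film)
    return {
        scene.name: (
            scale_factor(static_peak, display_peak),     # static: same for every scene
            scale_factor(scene.max_nits, display_peak),  # dynamic: per scene (HDR10+/Dolby Vision-style)
        )
        for scene in film
    }


film = [Scene("night street", 200.0), Scene("sunlit beach", 4000.0)]
for name, (static, dynamic) in compare(film, display_peak=600.0).items():
    print(f"{name}: static x{static:.2f}, dynamic x{dynamic:.2f}")
```

With static metadata, the dark night scene is dimmed to 15% of its mastered brightness only because the film also contains a 4,000-nit beach scene. With per-scene values, the night scene is shown as mastered and only the beach scene is scaled down.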

Here is an example of how HDR works. You have enabled HDR on your TV and started watching an HDR movie. The TV detects the metadata in the incoming content and processes the video according to it. For each scene, the metadata describes the brightness and contrast levels the picture was mastered with, and the TV adapts the image to what its screen can show (a process known as tone mapping). In a dark scene, for example, bright objects are slightly darkened, while dim objects that should remain visible are brightened. The result is a higher-quality image.
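The scene-by-scene adjustment described above can be sketched as a simple tone curve. This is a hypothetical, illustrative curve, not the algorithm of any TV manufacturer or HDR format. It gently lifts the darkest shadows, leaves mid-tones alone, and compresses highlights above a "knee" so that the scene's brightest value (taken from metadata) lands exactly at the TV's peak. The parameter names and default values are assumptions:

```python
def tone_map(nits: float, scene_max: float, display_peak: float,
             knee_ratio: float = 0.75, shadow_limit: float = 5.0,
             shadow_gamma: float = 0.9) -> float:
    """Hypothetical scene-aware tone curve: mastered nits -> displayed nits."""
    x = min(max(nits, 0.0), scene_max)
    # 1) Lift deep shadows slightly so dim details stay visible
    #    (a gamma below 1 brightens values under shadow_limit; continuous at the limit).
    if x < shadow_limit:
        return shadow_limit * (x / shadow_limit) ** shadow_gamma
    # 2) If the whole scene fits on this display, show it as mastered.
    if scene_max <= display_peak:
        return x
    # 3) Otherwise keep values below the knee and squeeze the rest into the remaining headroom.
    knee = knee_ratio * display_peak
    if x <= knee:
        return x
    return knee + (x - knee) * (display_peak - knee) / (scene_max - knee)


# A bright scene mastered to 4,000 nits on an assumed 600-nit TV:
for value in (2, 300, 1000, 4000):
    print(value, round(tone_map(value, scene_max=4000, display_peak=600), 1))
```

In this example, everything up to 450 nits passes through unchanged and the range from 450 to 4,000 nits is squeezed into the last 150 nits of the display. For a dim scene whose peak already fits on the screen, only the shadow lift applies.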

Keep in mind that a TV in HDR mode consumes more power, largely because the screen is driven at higher brightness, and partly because the extra video processing puts an increased load on the processor.

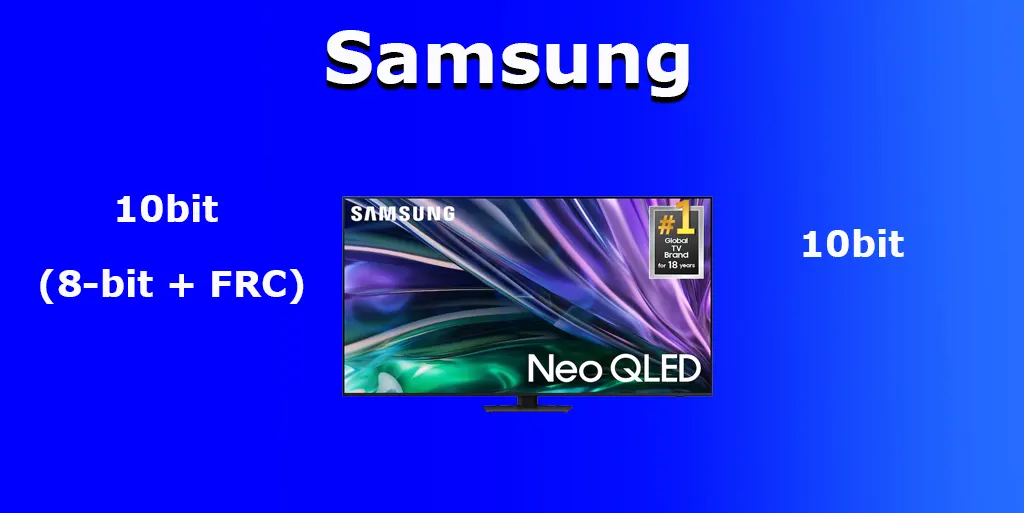

Do all TVs support HDR?

You should know that while many TVs nominally accept HDR signals, not all of them can actually display HDR. For example, TVs whose panels have a color depth of 8 bits cannot display HDR content as intended, because HDR standards are designed around a 10-bit (or higher) signal. Therefore, HDR works properly on OLED and QLED TVs with true 10-bit panels. On LED and QLED TVs that use dithering or FRC (Frame Rate Control) to simulate more colors, HDR works only to a limited extent, and in practice the improvement over SDR can be small.
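FRC approximates the missing levels by switching a pixel between two neighboring 8-bit levels over successive frames, so that the average over time lands in between. Here is a simplified sketch of that idea. It uses a hypothetical four-frame pattern; real panels combine temporal and spatial dithering in their own, usually undocumented, ways:

```python
def frc_frames(code10: int) -> list[int]:
    """Hypothetical 4-frame FRC pattern showing a 10-bit level on an 8-bit panel."""
    if not 0 <= code10 <= 1023:
        raise ValueError("10-bit code must be in 0..1023")
    # One 8-bit step equals four 10-bit steps: split into an 8-bit level and leftover quarters.
    base, remainder = divmod(code10, 4)
    # Clamp: the top 10-bit codes (1021-1023) cannot be reached exactly on an 8-bit panel.
    high = min(base + 1, 255)
    # Show the higher level in `remainder` of the 4 frames, the lower level otherwise.
    return [high if frame < remainder else base for frame in range(4)]


frames = frc_frames(514)
print(frames, sum(frames) / 4)
```

For the 10-bit code 514, the pixel shows 129 for two frames and 128 for two frames, which averages to 128.5 in 8-bit terms, that is, 514 / 4. The flicker between levels and the unreachable top codes are part of why such panels only approximate true 10-bit output.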